TL;DR: This one is software heavy!

Project MP is really taking shape! Whereas I used a Ryzen 3 1200 to install Unraid and begin configuring, the replacement CPU, a 6-core, 12-thread Ryzen 5 3600, has now arrived and been slapped straight into the machine, ready for distribution!

Ryzen 5, 16GB RAM and 3x GPU installed.

Now came the hard part. Three GPUs are installed in the system: an old ATI HD5430, a GT 710 and a GTX 960. Historically, NVIDIA GPUs have been difficult to get working in VMs due to the infamous Code 43 error. I'm here to tell you that is no more. I set up a Linux VM running Pop!_OS and am duplicating it for a total of three VMs, one per player; however, the process is similar for all OSes (including Windows!).

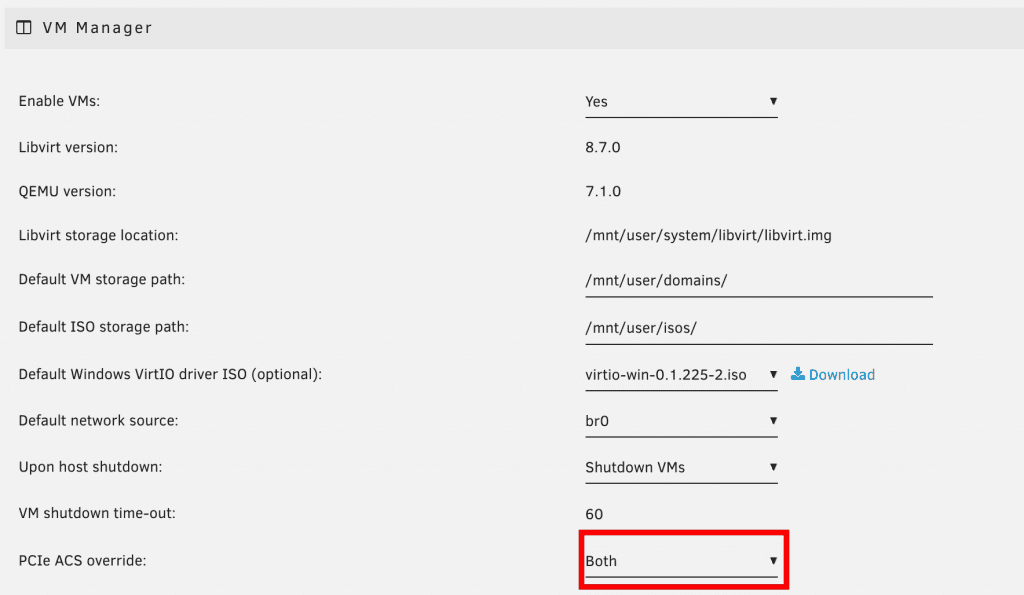

Getting the GPUs assigned along with the USB ports was a learning experience that I would like to share. To pass through PCIe devices (in my case 3x GPU and 3x USB controllers), the first step is to open "VM Manager" in Settings and change the ACS override to (in my case) "Both". This is important for the next step.
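Behind the scenes, the ACS override option adds a kernel boot parameter. As far as I understand it, "Both" corresponds to `pcie_acs_override=downstream,multifunction` on the Unraid boot (syslinux) append line, which is an assumption worth checking against your own boot config. A minimal Python sketch to see which value the running kernel was actually booted with, by reading `/proc/cmdline`; the function name `acs_override_from_cmdline` is hypothetical:

```python
from pathlib import Path
from typing import List, Optional


def acs_override_from_cmdline(cmdline: str) -> Optional[List[str]]:
    """Return the pcie_acs_override values on a kernel command line, or None if absent."""
    for token in cmdline.split():
        key, sep, value = token.partition("=")
        if key == "pcie_acs_override" and sep:
            # e.g. "downstream,multifunction" -> ["downstream", "multifunction"]
            return value.split(",")
    return None


if __name__ == "__main__":
    # /proc/cmdline holds the parameters the running kernel was booted with.
    proc = Path("/proc/cmdline")
    if proc.exists():
        print(acs_override_from_cmdline(proc.read_text()))
```

If this prints `None` after changing the setting, the host most likely has not been rebooted yet.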

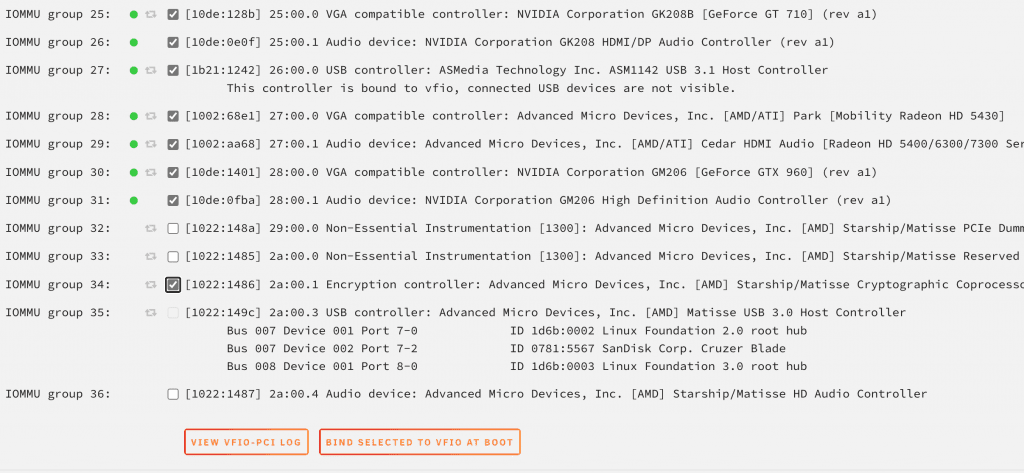

Next, open the “System Devices” tool in the “Tools” menu and you will see your devices have now been split into their individual IOMMU groups (provided you have enabled IOMMU in the BIOS settings of your motherboard!!!)
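The System Devices page is essentially a view of the kernel's IOMMU grouping, which Linux exposes under `/sys/kernel/iommu_groups/<N>/devices/`. A minimal Python sketch that reproduces the grouping from the host's command line (the helper name `iommu_groups` is hypothetical, and `root` is a parameter only so it can be pointed at a test directory):

```python
from pathlib import Path
from typing import Dict, List


def iommu_groups(root: str = "/sys/kernel/iommu_groups") -> Dict[int, List[str]]:
    """Map each IOMMU group number to the sorted PCI addresses it contains."""
    groups: Dict[int, List[str]] = {}
    base = Path(root)
    if not base.is_dir():
        # Missing when IOMMU is disabled in the BIOS or kernel.
        return groups
    for group_dir in base.iterdir():
        devices = group_dir / "devices"
        if group_dir.name.isdigit() and devices.is_dir():
            # Each entry in devices/ is named after a PCI address, e.g. 0000:01:00.0
            groups[int(group_dir.name)] = sorted(d.name for d in devices.iterdir())
    return dict(sorted(groups.items()))


if __name__ == "__main__":
    for group, addrs in iommu_groups().items():
        print(f"IOMMU group {group}: {', '.join(addrs)}")
```

Ideally each GPU (together with its HDMI audio function) and each USB controller ends up in a group of its own, because every device in a group has to be handed to the VM together.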

Here you can tick the devices you want to isolate from Unraid at boot, then click the "Bind Selected to VFIO at Boot" button to finish isolating these PCIe / USB devices. This also works for NVMe drives and pretty much any other device you want to give your VMs direct physical access to.
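After the reboot, one way to confirm the isolation worked is to look at which driver each device is bound to: Linux exposes this as a `driver` symlink under `/sys/bus/pci/devices/<address>/`, and isolated devices should report `vfio-pci`. A minimal Python sketch, where `bound_driver` is a hypothetical helper and the PCI addresses are placeholders to be replaced with the ones from System Devices:

```python
import os
from pathlib import Path
from typing import Optional


def bound_driver(pci_addr: str, root: str = "/sys/bus/pci/devices") -> Optional[str]:
    """Return the kernel driver bound to a PCI device, or None if nothing is bound."""
    link = Path(root) / pci_addr / "driver"
    if not link.is_symlink():
        return None
    # 'driver' points at .../drivers/<name>; the basename is the driver's name.
    return os.path.basename(os.readlink(link))


if __name__ == "__main__":
    # Hypothetical addresses: substitute your own GPU / USB controller addresses.
    for addr in ("0000:01:00.0", "0000:01:00.1"):
        print(addr, "->", bound_driver(addr) or "no driver")
```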

Finally, editing a VM now shows these devices as available to pass through!
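Unraid's VMs are managed by libvirt, so ticking a device in the VM form should end up as a `<hostdev>` entry in the VM's XML, which Unraid's XML view of the VM editor lets you inspect. A minimal sketch of that mapping, assuming the standard libvirt PCI hostdev format; `hostdev_xml` is a hypothetical helper, not an Unraid tool:

```python
import re
import xml.etree.ElementTree as ET

# Full PCI address: domain:bus:slot.function, e.g. 0000:01:00.0
PCI_ADDR = re.compile(r"([0-9a-fA-F]{4}):([0-9a-fA-F]{2}):([0-9a-fA-F]{2})\.([0-7])")


def hostdev_xml(pci_addr: str) -> str:
    """Build a libvirt <hostdev> element passing through the given PCI device."""
    m = PCI_ADDR.fullmatch(pci_addr)
    if m is None:
        raise ValueError(f"not a full PCI address: {pci_addr!r}")
    domain, bus, slot, function = m.groups()
    # managed='yes' asks libvirt to detach/reattach the device from the host driver.
    hostdev = ET.Element("hostdev", mode="subsystem", type="pci", managed="yes")
    source = ET.SubElement(hostdev, "source")
    ET.SubElement(
        source,
        "address",
        domain=f"0x{domain}",
        bus=f"0x{bus}",
        slot=f"0x{slot}",
        function=f"0x{function}",
    )
    return ET.tostring(hostdev, encoding="unicode")


if __name__ == "__main__":
    print(hostdev_xml("0000:01:00.0"))  # hypothetical GPU address
```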

Change the ACS option

Select the IOMMU groups

Add devices to the VM!

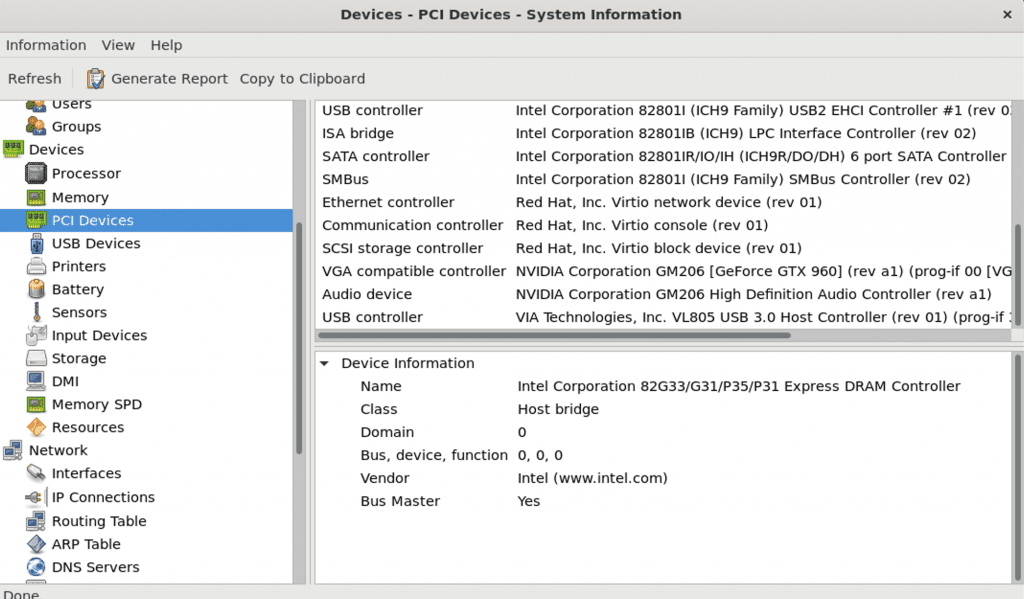

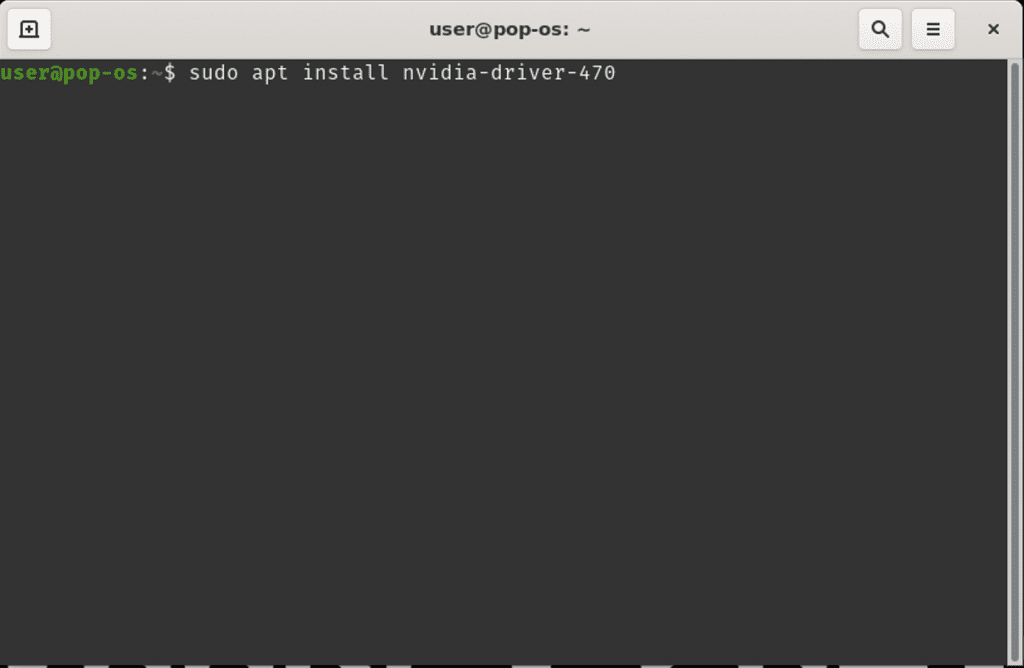

One big problem: out of the box I could connect to the VM over VNC, but I couldn't use the GPU with a physical monitor yet (keyboard and mouse were working fine!). After a quick check in HardInfo, it was time to open the command line over VNC and type `sudo apt install nvidia-driver-470`. I first tried the recommended driver from NVIDIA, but it didn't work; this one did for me. Your mileage may vary.

One reboot later, the GPU was showing under About in the Settings app, and the display was appearing on my monitor!
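From inside the guest, `lspci -k` prints each PCI device followed by indented lines including "Kernel driver in use:", which gives a quick command-line confirmation that the passed-through card is actually driven by `nvidia`. A minimal Python sketch that pulls that out for VGA controllers; `vga_drivers` is a hypothetical helper, and it reports `none` when no driver is bound:

```python
import shutil
import subprocess
from typing import Dict, Optional


def vga_drivers(lspci_k_output: str) -> Dict[str, str]:
    """Map each VGA controller line in `lspci -k` output to its kernel driver in use."""
    result: Dict[str, str] = {}
    current: Optional[str] = None
    for line in lspci_k_output.splitlines():
        if line and not line[0].isspace():
            # A new device starts at column 0; track it only if it is a VGA controller.
            current = line if "VGA compatible controller" in line else None
            if current:
                result[current] = "none"
        elif current and line.strip().startswith("Kernel driver in use:"):
            result[current] = line.split(":", 1)[1].strip()
    return result


if __name__ == "__main__":
    if shutil.which("lspci"):
        out = subprocess.run(["lspci", "-k"], capture_output=True, text=True).stdout
        for device, driver in vga_drivers(out).items():
            print(f"{driver:10} {device}")
```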

Pop!_OS installed

GPU recognised

Installing Drivers

Unraid Host, Linux VM – Physical output from a VM to a monitor!

Thankfully, the NVIDIA driver is fairly universal, so once EmulationStation and OpenArena were installed I was able to clone the VM drive two more times… and done! The software is installed, all three VMs have physical outputs and are controllable, and thanks to Unraid's network bridging they can even play on their own private LAN when disconnected from the main network!
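One thing worth checking when cloning for LAN play: if a VM's XML is copied wholesale rather than the new VM being created through Unraid's form, each clone still needs its own MAC address on the bridge, otherwise the VMs will clash on the network. libvirt/QEMU conventionally use the locally administered `52:54:00` prefix for guest NICs. A minimal sketch that hands out unique addresses; `random_vm_mac` and the VM names are hypothetical:

```python
import random
from typing import Optional, Set


def random_vm_mac(taken: Set[str], rng: Optional[random.Random] = None) -> str:
    """Return a 52:54:00:xx:xx:xx MAC not already in `taken`, and record it there."""
    rng = rng or random.Random()
    known = {m.lower() for m in taken}  # compare case-insensitively
    while True:
        tail = [rng.randrange(256) for _ in range(3)]
        mac = "52:54:00:" + ":".join(f"{b:02x}" for b in tail)
        if mac not in known:
            taken.add(mac)
            return mac


if __name__ == "__main__":
    used: Set[str] = set()
    for name in ("player1", "player2", "player3"):  # hypothetical VM names
        print(name, random_vm_mac(used))
```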

In the next part I’ll show off the finished build and talk about the next steps for project MP!
